Autonomous camera traps for insects provide a tool for long-term remote monitoring of insects. These systems bring together cameras, computer vision, and autonomous infrastructure such as solar panels, mini computers, and data telemetry to collect images of insects.

With increasing recognition of the importance of insects as the dominant component of almost all ecosystems, there are growing concerns that insect biodiversity has declined globally, with serious consequences for the ecosystem services on which we all depend.

Automated camera traps for insects offer one of the best practical and cost-effective solutions for more standardised monitoring of insects across the globe. However, to realise this we need interdisciplinary teams who can work together to develop the hardware systems, AI components, metadata standards, data analysis, and much more.

This WILDLABS group has been set up by people from around the world who have individually been tackling parts of this challenge and who believe we can do more by working together.

We hope you will become part of this group where we share our knowledge and expertise to advance this technology.

Check out Tom's Variety Hour talk for an introduction to this group.

Learn about Autonomous Camera Traps for Insects by checking out recordings of our webinar series:

- Hardware design of camera traps for moth monitoring

- Assessing the effectiveness of these autonomous systems in real-world settings, and comparing results with traditional monitoring methods

- Designing machine learning tools to process camera trap data automatically

- Developing automated camera systems for monitoring pollinators

- India-focused projects on insect monitoring

Meet the rest of the group and introduce yourself on our welcome thread - https://www.wildlabs.net/discussion/welcome-autonomous-camera-traps-insects-group

Group curators

- @tom_august | he/him

Computational ecologist with interests in computer vision, citizen science, open science, drones, acoustics, data viz, software engineering, public engagement

DIY electronics for behavioral field biology

- @ARobillard | He/Him

A conservation data scientist and field ecologist with a broad interest in applying machine learning and population genetics to the conservation of threatened species. Alex has conducted field studies throughout Central and South America, the Caribbean, and North America.

https://www.songquanong.com/

- @ahmedjunaid | He/His

Zoologist, Ecologist, Conservation Biologist

- @rays45693 | he/him

PhD Student in Computer Science at Rensselaer Polytechnic Institute, working on wildlife conservation using deep learning

Wildlife enthusiast who happens to run an embedded systems lab

- @hannahrisser | She/her

CEH

- @Mathilde | she/her

Natural Solutions

I am an engineer at a web development company, working on web application projects for biodiversity conservation. I'm especially interested in camera traps, remote sensing, and deep learning.

The AMI team in Montreal is looking for a paid intern to do machine vision stuff!

3 July 2024

Come and do the first research into responsible AI for biodiversity monitoring, developing ways to ensure these AIs are safe, unbiased and accountable.

11 June 2024

€4,000 travel grants are available for researchers interested in insect monitoring using automated cameras and computer vision

6 June 2024

WildLabs will soon launch a 'Funding and Finance' group. What would be your wish list for such a group? Would you be interested in co-managing or otherwise helping out?

5 June 2024

Do you have photos and videos of your conservation tech work? We want to include them in a conservation technology showcase video

17 May 2024

€2,000 travel grants are available for researchers interested in insect monitoring using automated cameras and computer vision

3 May 2024

Article

Read in detail about how to use The Inventory, our new living directory of conservation technology tools, organisations, and R&D projects.

1 May 2024

Article

The Inventory is your one-stop shop for conservation technology tools, organisations, and R&D projects. Start contributing to it now!

1 May 2024

The Smithsonian National Zoo & Conservation Biology Institute is seeking a Postdoctoral Research Fellow to help us integrate movement data & camera trap data with global conservation policy.

22 April 2024

We invite applications for the third Computer Vision for Ecology (CV4E) workshop, a three-week hands-on intensive course in CV targeted at graduate students, postdocs, early faculty, and junior researchers in Ecology...

12 February 2024

The primary focus of the research is to explore how red deer movements, space use, habitat selection and foraging behaviour change during the wolf recolonization process.

10 February 2024

Outstanding chance for a motivated and ambitious individual to enhance their current project support skills by engaging with a diverse array of exciting projects in the field of biodiversity science.

11 December 2023

| Description | Activity | Replies | Groups | Updated |
|---|---|---|---|---|
| Great resource!!! | | | Autonomous Camera Traps for Insects | 2 weeks 1 day ago |
| Congrats on the wonderful success. Keep it up | | | Autonomous Camera Traps for Insects | 2 weeks 2 days ago |
| Hi everyone, I am conducting a research project as part of my MSc in Environment and Development at the London School of Economics. I... | | | Acoustics, AI for Conservation, Autonomous Camera Traps for Insects, Ethics of Conservation Tech | 3 weeks 2 days ago |
| A fantastic solution @hikinghack ! Those Pijuice boards are more expensive than the pis themselves! It already sounds as if you've gotten the power use in sleep down a long... | +2 | | Autonomous Camera Traps for Insects | 1 month 2 weeks ago |
| I was thinking that you might be able to reduce the amount of time the lights were on by blinking them, but this paper seems to show that flickering mostly reduces the number of... | | | Autonomous Camera Traps for Insects | 2 months 1 week ago |
| Hey there @JakeBurton , sorry, I did not see your message! Why don't you shoot me an email with some tentative availability to luca.pegoraro (at) wsl.ch, and we take it from... | | | Autonomous Camera Traps for Insects | 2 months 4 weeks ago |
| Gotcha, well I look forward to seeing future iterations and following along with your progress!! | | | Autonomous Camera Traps for Insects, AI for Conservation, Emerging Tech, Open Source Solutions | 3 months 3 weeks ago |
| More cool things surrounding the Mothbox project keep happening! Here’s a recap of cool developments over the past month! New Teammate! Bri... | | | Autonomous Camera Traps for Insects | 3 months 4 weeks ago |
| Greetings Everyone, We are so excited to share details of our WILDLABS AWARDS project "Enhancing Pollinator Conservation through Deep... | | | AI for Conservation, Autonomous Camera Traps for Insects | 4 months 1 week ago |
| For our [mothbox project](https://forum.openhardware.science/c/projects/mothbox/73) we are programming pijuices and pis to automatically... | | | Autonomous Camera Traps for Insects | 4 months 4 weeks ago |
| Hi, I made a little utility script that folks here might find useful (or might have MUCH BETTER VERSIONS OF! and if so, let me know!) ... | | | Autonomous Camera Traps for Insects | 5 months 2 weeks ago |
| We did some more testing with the Mothbeam in the forest. It's the height of dry season right now, so not many moths came out, but the mothbeam shined super bright and attracted a... | | | Autonomous Camera Traps for Insects | 6 months ago |

Update 3: Cheap Automated Mothbox

31 December 2023 9:37pm

5 January 2024 8:56pm

Thanks!!!

10 January 2024 11:33pm

Great work! I very much look forward to trying out the MothBeam light. That's going to be a huge help in making moth monitoring more accessible.

And well done digging into the picamera2 library to reduce the amount of time the light needs to be on while taking a photo. That is a super annoying issue!

Project support officer - Conservation Tech

11 December 2023 10:24pm

Project introductions and updates

2 August 2022 11:21am

29 March 2023 6:05pm

Hi all! I'm part of a Pollinator Monitoring Program at California State University, San Marcos which was started by a colleague lecturer of mine who was interested in learning more about the efficacy of pollinator gardens. It grew to include comparing local natural habitat of the Coastal Sage Scrub and I was initially brought on board to assist with data analysis, data management, etc. We then pivoted to the idea of using camera traps and AI for insect detection in place of the in-person monitoring approach (for increasing data and adding a cool tech angle to the effort, given it is of interest to local community partners that have pollinator gardens).

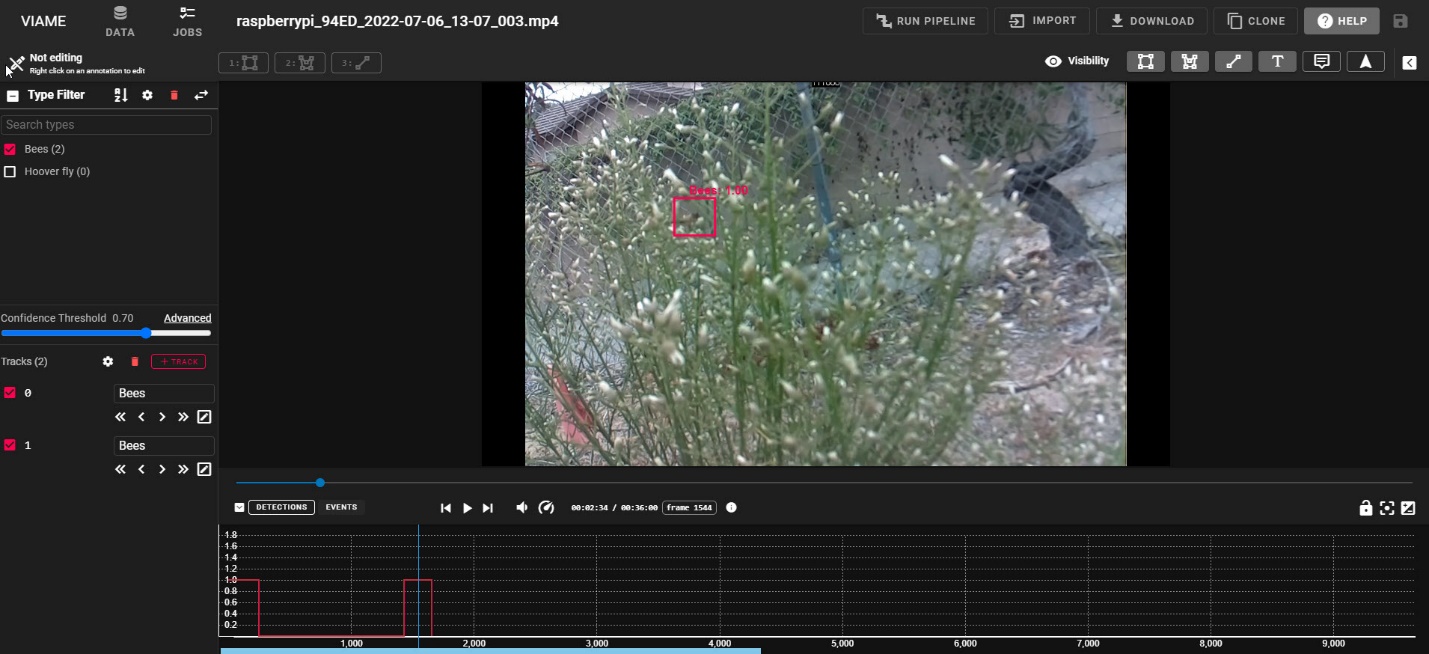

The group heavily involves students as researchers, and they are instrumental to the projects. We have settled on a combination of video footage and development of deep neural networks using the cloud-hosted video track detection tool, VIAME (developed by Kitware for NOAA Fisheries originally for fish track detection). Students built our first two PICTs (low-cost camera traps), and annotated the data from our pilot study that we are currently starting the process of network development for. Here's a cool pic of the easy-to-use interface that students use when annotating data:

Figure 1: VIAME software demonstrating annotation of the track of an insect in the video (red box). Annotations are done manually to develop a neural network for the automated processing.

The goal of the group's camera trap team is to develop a neural network that can track insect pollinators associated with a wide variety of plants, and to use it to collect large datasets that help us better understand pollinator occurrence and activity within local habitats. This ultimately relates to native habitat health and can be used for long-term tracking of changes in the ecosystem, with the idea that knowledge of pollinators may inform resource and conservation managers, as well as local organizations, in their land use practices. We are ultimately interested in working further with the Kitware folks, not only to develop a robust network (and share it broadly, of course!), but also to customize the data extraction from automated tracks to include automated species/species group identification and information on interaction rate by those pollinators. We would love any suggestions for appropriate proposals to apply to, as well as any information or suggestions regarding the PICT camera or our methods. We are looking to include night-time data collection at some point as well and are aware that near-infrared is advised, but would appreciate any thoughts or advice on that avenue too.

We will of course post when we have more results and look forward to hearing more about all the interesting projects happening in this space!

Cheers,

Liz Ferguson

5 April 2023 10:00pm

Hi, indeed as Tom mentioned, I am working here in Vermont on moth monitoring using machines with Tom and others. We have a network going from here into Canada with others. Would love to catch up with you soon. I am away until late April, but would love to connect after that!

10 December 2023 5:10pm

The preprint to our camera trap paper is now available at bioRxiv.

Two-year postdoc in AI and remote sensing for citizen-science pollinator monitoring

4 December 2023 12:21pm

AWMS Conference 2023

Update 2: Cheap Automated Mothbox

23 October 2023 8:32pm

24 October 2023 9:05am

Hi Andrew,

thanks for sharing your development process so openly, that's really cool and boosts creative thinking also for the readers! :)

Regarding a solution for Raspberry Pi power management: we are using the PiJuice Zero pHAT in combination with a Raspberry Pi Zero 2 W in our insect camera trap. There are also other versions available, e.g. for RPi 4 (more info: PiJuice GitHub). From my experience the PiJuice works mostly great and is super easy to install and set up. Downsides are the price and the lack of further software updates/development. It would be really interesting if you could compare one of their HATs to the products from Waveshare. Another possible solution would be a product from UUGear. I have the Witty Pi 4 L3V7 lying around, but couldn't really test and compare it to the PiJuice HAT yet.

Is there a reason why you are using the Raspberry Pi 4? From what I understand about your use case, the RPi Zero 2 W or even RPi Zero should give enough computing power and require a lot less power. Also they are smaller and would be easier to integrate in your box (and generate less heat).

I'm excited for the next updates to see in which direction you will be moving forward with your Mothbox!

Best,

Max

25 October 2023 6:21pm

Thanks for these tips!

We are using an RPi 4 because the people I am building it for want images from the 64MP camera from Arducam, and we need the RPi 4 to make that camera work.

27 October 2023 6:44am

Thanks a lot for this detailed update on your project! It looks great!

Entomological Research Specialist for Automated Insect Monitoring

25 October 2023 7:21pm

Cheap Automated Mothbox

31 August 2023 10:19pm

25 October 2023 5:04pm

I'm looking into writing a sketch for the esp32-cam that can detect pixel changes and take a photo, wish me luck.

25 October 2023 5:11pm

One question, does it even need motion detection? What about taking a photo every 5 seconds and sorting the photos afterwards?

25 October 2023 6:23pm

It depends on which scientists you talk to. I am in favor of just doing a timelapse and doing a post-processing sort afterwards. There's not much reason I can see for such motion fidelity. For the box I am making we are doing exactly that, though maybe a photo every minute or so.
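
The "timelapse first, sort afterwards" idea discussed above can be sketched as a simple frame-differencing pass over the saved photos. This is only an illustration, not anyone's actual pipeline: frames are assumed to be already loaded as grayscale grids of 0-255 values, and the function names and both thresholds (`pixel_delta`, `min_fraction`) are hypothetical values that would need tuning on real images.

```python
from typing import List, Sequence

# A grayscale frame: rows of pixel brightness values in the range 0-255.
Frame = Sequence[Sequence[int]]


def changed_fraction(prev: Frame, curr: Frame, pixel_delta: int = 25) -> float:
    """Fraction of pixels whose brightness differs by more than pixel_delta."""
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_delta:
                changed += 1
    return changed / total if total else 0.0


def frames_with_activity(
    frames: Sequence[Frame],
    pixel_delta: int = 25,      # assumed per-pixel brightness threshold
    min_fraction: float = 0.01, # assumed share of pixels that must change
) -> List[int]:
    """Indices of frames that differ noticeably from the frame before them."""
    keep = []
    for i in range(1, len(frames)):
        if changed_fraction(frames[i - 1], frames[i], pixel_delta) >= min_fraction:
            keep.append(i)
    return keep


# Tiny synthetic example: an empty 4x4 background and one bright "insect" pixel.
background = [[0] * 4 for _ in range(4)]
with_insect = [row[:] for row in background]
with_insect[1][2] = 200

print(frames_with_activity([background, background, with_insect, with_insect, background]))
# prints [2, 4]: the frame where the insect arrives and the frame where it leaves
```

Note that comparing consecutive frames only flags arrivals and departures; an insect that sits still between two photos is not flagged again. Comparing every frame against a fixed reference image of the empty background would be one alternative.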

Metadata standards for Automated Insect Camera Traps

24 November 2022 9:49am

2 December 2022 3:58pm

Yes. I think this is really the way to go!

6 July 2023 4:48am

Here is another metadata initiative to be aware of. OGC has been developing a standard for describing training datasets for AI/ML image recognition and labeling. The review phase is over and it will become a new standard in the next few weeks. We should consider its adoption when we develop our own training image collections.

24 October 2023 9:12am

For anyone interested: the GBIF guide Best Practices for Managing and Publishing Camera Trap Data is still open for review and feedback until next week. More info can be found in their news post.

Best,

Max

Automated moth monitoring & you!

24 October 2023 8:52am

Catch up with The Variety Hour: October 2023

19 October 2023 11:59am

Q&A: UK NERC £3.6m AI (image) for Biodiversity Funding Call - ask your questions here

13 September 2023 4:10pm

21 September 2023 4:27pm

This is super cool! @Hubertszcz, @briannajohns, several others and I are all working towards some big biodiversity monitoring projects for a large conservation project here in Panama. The conservation project is happening already, but Hubert starts on the ground work in January and I'm working on a V3 of our open source automated insect monitoring box to have ready for him by then.

I guess my main question would be whether this funding call is appropriate for this type of project, and what types of assistance are possible through this type of funding (researchers? design time? materials? laboratory field construction?).

Wisconsin - Insect Light Trap

18 September 2023 5:34am

Best Material for Moth Lighting?

9 September 2023 1:46am

9 September 2023 5:22am

Plasticy substances like polyester can be slippery, so I imagine that's why cotton is most often used. White is good for color correction, while still reflecting light pretty well. When I've had the option I've chosen high thread count cotton sheets, so the background is smoothest and even the tiniest arthropods are on a flat background, not within contours of threads. Main problem with cotton is mildew and discoloration.

That being said, I haven't actually done proper tests with different materials. Maybe a little side project once standardized light traps are a thing?

Improving the generalization capability of YOLOv5 on remote sensed insect trap images with data augmentation

31 August 2023 8:29am

Interesting new methods to help improve insect detection

"...this paper proposes three previously unused data augmentation approaches (gamma correction, bilateral filtering, and bit-plate slicing) which artificially enrich the training..."

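Two of the three augmentations named in the quote are simple per-pixel operations and can be sketched without any image library. The sketch below is not the paper's implementation: it assumes 8-bit grayscale images stored as nested lists, reads the quoted "bit-plate slicing" as the standard bit-plane slicing operation, and uses hypothetical function names. Bilateral filtering is left out because it is normally taken from an image-processing library rather than written by hand.

```python
from typing import List

Image = List[List[int]]  # 8-bit grayscale image, values 0-255


def gamma_correct(image: Image, gamma: float) -> Image:
    """Apply out = 255 * (in / 255) ** gamma to every pixel.

    With this convention gamma < 1 brightens the image and gamma > 1 darkens it.
    """
    lookup = [round(255 * (value / 255) ** gamma) for value in range(256)]
    return [[lookup[pixel] for pixel in row] for row in image]


def bit_plane(image: Image, bit: int) -> Image:
    """Keep a single bit of each pixel (0 = least significant, 7 = most).

    The result is a black-and-white image: 255 where the bit is set, else 0.
    """
    if not 0 <= bit <= 7:
        raise ValueError("bit must be between 0 and 7 for 8-bit images")
    mask = 1 << bit
    return [[255 if pixel & mask else 0 for pixel in row] for row in image]


sample = [[0, 64, 128, 255]]
darker = gamma_correct(sample, 2.0)   # mid-tones are pushed towards black
top_plane = bit_plane(sample, 7)      # [[0, 0, 255, 255]]
```

Each transformed copy would be added to the training set alongside the original image and its unchanged bounding-box labels, since neither operation moves any pixels.
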
360 Camera for Marine Monitoring

25 July 2023 8:54am

28 July 2023 7:43pm

Hi Sol,

For my research on fish, I had to put together a low-cost camera that could record video for several weeks. Here is the design I came up with

At the time of the paper, I was able to record video for ~12 hours a day at 10 fps and for up to 14 days. With new SD cards now, it is pushed to 21 days. It costs about 600 USD if you build it yourself. If you don't want to make it yourself, there is a company selling it now, but it is much more expensive. The FOV is 110 degrees, so not the 360 that you need, but I think there are ways to make it work (e.g. with the servo motor).

Happy to chat if you decide to go this route and/or want to brainstorm ideas.

Cheers,

Xavier
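
For anyone planning a similar deployment, the recording schedule quoted above translates directly into a frame count, which is a useful starting point for sizing storage. A minimal sketch using only the figures from the post (about 12 hours a day, 10 fps, 14 or 21 days); the function name is hypothetical.

```python
def total_frames(hours_per_day: float, fps: float, days: int) -> int:
    """Number of video frames recorded over a whole deployment."""
    seconds_per_day = hours_per_day * 3600
    return int(seconds_per_day * fps * days)


print(total_frames(12, 10, 14))  # prints 6048000
print(total_frames(12, 10, 21))  # prints 9072000
```

Dividing the usable capacity of the SD card by this count gives the average number of bytes available per frame, which in turn constrains the resolution and compression settings.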

3 August 2023 2:32am

Hi Xavier, this is fantastic! Thanks for sharing, the time frame is really impressive and really in line with what we're looking for. I'll send you a message.

Cheers,

Sol

3 August 2023 3:19am

I agree, this would be great for canopy work!

European Forum Alpbach

1 August 2023 5:23pm

Insect camera traps for phototactic insects and diurnal pollinating insects

20 March 2023 9:39am

25 May 2023 7:09am

OK, thanks!

10 July 2023 1:57pm

Hi @abra_ash , @MaximilianPink, @Sarita , @Lars_Holst_Hansen.

I'm looking to train a very compact (TinyML) model for flying pollinator detection on a static background. I hope a network small enough for microcontroller hardware will prove useful for measuring plant-pollinator interactions in the field.

Presently, I'm gathering a dataset for training using a basic motion-triggered video-capture program on a raspberry pi. This forms a very crude insect camera trap.

I'm wondering if anyone has any insights on how I might attract pollinators into my camera field of view? I've done some very elementary reading on bee optical vision and currently trying the following:

Purple and yellow artificial flowers are placed on a green background; the centers of the flowers are lightly painted with a UV (365 nm) coat.

A sugar paste is added to each flower.

The system is deployed in an inner-city garden (outside my flat), and I regularly see bees attending the flowers nearby.

Here's a picture of the field of view:

Does anyone have ideas for how I might maximise insect attraction? I'm particularly interested in what @abra_ash and @tom_august might have to say - are optical methods enough or do we need to add pheromone lures?

Thanks in advance!

Best,

Ross

20 July 2023 4:40pm

Hi Ross,

Where exactly did you put the UV paint? Was it on the petals or the actual middle of the flowers?

I would recommend switching from sugar paste to sugar water and maybe put a little hole in the centre for a nectary. Adding scent would make the flowers more attractive but trying to attract bees is difficult since they very obviously prefer real flowers to artificial ones. I would recommend getting the essential oil Linalool since it is a component of scented nectar and adding a small amount of it to the sugar water. Please let us know if the changes make any difference!

Kind Regards,

Abra

Welcome to the Autonomous camera traps for insects group!

1 August 2022 10:52am

9 May 2023 12:19pm

Hi Peter,

EcoAssist looks really cool! It's great that you combined every step for custom model training and deployment into one application. I will take a deeper look at it asap.

Regarding YOLOv5 insect detection models:

- Bjerge et al. (2023) released a dataset with annotated insects on complex background together with three YOLOv5 models at Zenodo.

- For a DIY camera trap for automated insect monitoring, we published a dataset with annotated insects on homogeneous background (flower platform) at Roboflow Universe and at Zenodo. The available models that are trained on this dataset are converted to .blob format for deployment on the Luxonis OAK cameras. If you are interested, I could train a YOLOv5 model with your preferred parameters and input size and send it to you in PyTorch format (and/or ONNX for CPU inference) to include in your application. Of course you can also use the dataset to train the model on your own.

Best,

Max

11 May 2023 4:59pm

Hi Max, thanks for your reply! I'll have a look and come back to you.

19 July 2023 11:08am

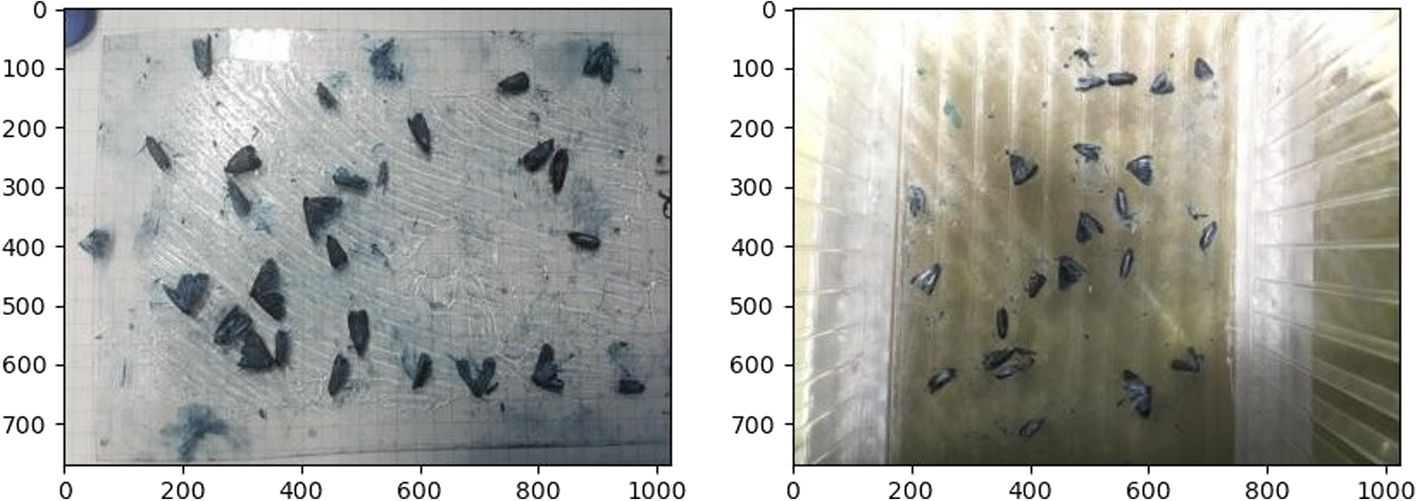

Greetings, everyone! I'm thrilled to join this wonderful community. I work as a postdoctoral researcher at MeBioS KU Leuven having recently completed my PhD on "Optical insect identification using Artificial Intelligence". While our lab primarily focuses on agricultural applications, we're also eager to explore biodiversity projects for insect population estimation, which provides crucial insights into our environment's overall health.

Our team has been developing imaging systems that leverage Raspberry Pis, various camera models, and sticky traps to efficiently identify insects. My expertise lies in computer science and machine learning, and I specialize in building AI classification models for images and wingbeat signals. I've worked as a PhD researcher at a Neurophysiology lab in the past, as well as a Data Scientist at an applied AI company. You can find more about me by checking my website or my LinkedIn.

Recently, I've created a user-friendly web-app (Streamlit) which is hosted on AWS (FastAPI) that helps entomology experts annotate insect detections to improve our model's predictions. You can find some examples of this work here: [link1] and [link2]. And lastly, for anyone interested in tiling large images for object detection or segmentation purposes in a fast and efficient way, please check my open-source library "plakakia".

I'm truly excited to learn from and collaborate with fellow members of this forum, and I wish you all the best with your work!

Yannis Kalfas
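
To illustrate what tiling a large image for object detection involves, here is a generic sketch that computes overlapping tile coordinates. It does not use or reproduce the API of the "plakakia" library mentioned above; the function name, parameters, and the choice to push the last tile flush against the image edge are all assumptions made for this example.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def tile_boxes(width: int, height: int, tile: int, overlap: int = 0) -> List[Box]:
    """Return tile coordinates that cover a width x height image.

    Neighbouring tiles share `overlap` pixels so that an insect cut by one
    tile border is seen whole in the adjacent tile.
    """
    if tile <= 0 or not 0 <= overlap < tile:
        raise ValueError("need tile > 0 and 0 <= overlap < tile")
    step = tile - overlap

    def starts(size: int) -> List[int]:
        if size <= tile:
            return [0]  # image is smaller than one tile along this axis
        positions = list(range(0, size - tile + 1, step))
        if positions[-1] != size - tile:
            positions.append(size - tile)  # final tile sits flush with the edge
        return positions

    return [
        (x, y, min(x + tile, width), min(y + tile, height))
        for y in starts(height)
        for x in starts(width)
    ]


print(tile_boxes(120, 50, tile=50, overlap=10))
# prints [(0, 0, 50, 50), (40, 0, 90, 50), (70, 0, 120, 50)]
```

Detections made on each tile then need to be shifted by the tile's (left, top) offset to map them back to full-image coordinates, and duplicates in the overlap regions merged.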

Tools for automating image analysis for biodiversity monitoring

UK Research and Innovation

12 July 2023 4:50pm

New collaboration network - Computer vision for insects

26 June 2023 2:36pm

The Wildlife Society Conference

19 June 2023 5:59am

Wildlife Monitoring Engineer

8 June 2023 4:54pm

Capture And Identify Flying Insects

30 December 2022 7:34pm

3 January 2023 1:55pm

This sounds like an interesting challenge. I think depth of focus and shutter speeds are going to be challenging. You'll need a fast shutter speed to be able to get sharp images of insects in flight. Are you interested in species ID or are you more interested in abundance? Having a backboard on the other side of the hotel would be a good idea to remove background clutter from your images.

19 May 2023 8:18am

Hi there,

I am also trying to get some visuals from wildlife cameras of insects visiting insect hotels. Was wondering if you had gained any further information on which cameras might be used for testing this?

Postdoc for image-based insect monitoring with computer vision and deep learning

9 May 2023 12:27pm

What is the best light for attracting moths?

17 October 2022 3:12pm

13 January 2023 12:33pm

We have also thought about these sorts of things. We have chosen to keep the light on continuously for the night, but turn it off before dawn to allow the moths to fly away before predators arrive.

We are going to be trying out the EntoLEDs and LepiLEDs in Panama in the last two weeks of January, I'll post here on my thoughts.

15 April 2023 9:15pm

We are testing several cheap, bright 365 nm and 395 nm LEDs here in Panama over the next month.

5 May 2023 4:59pm

Would be great to hear more. We found that the lepiLED was great! The ento mini did not attract as much, but if compensated with many nights of deployment it would probably work okay.

Hack a momentary on-off button

15 April 2023 9:21pm

21 April 2023 2:30pm

Hi @hikinghack ,

If I am understanding correctly, you want to be able to have the UV lights come on and go off at a certain time (?) and emulate the button push which actually switches them on and off? Is the momentary switch the little button at the top of the image you attached? Is it going to be controlled by a timer or a microcontroller at all? Sorry for all the questions, but I am not 100% clear on exactly what you are after. In the meantime, I've linked to a pretty decent tutorial on the process of hacking a momentary switch with a view to automating it with an Arduino microcontroller board, although it sort of assumes a bit of knowledge of electronics (e.g. MOSFETs/transistors) in certain places.

Alternatively, this tutorial is also good, with good explanations throughout:

If neither of these help, let me know and there might be some easier instructions I can put together.

All the best,

Rob

22 April 2023 3:04am

Hi Andrew,

If I understand you correctly, you want to turn on the LEDs when USB power is applied. The easiest way I can see to do this is to reroute the red wire to USBC VBUS, via an appropriate current limiting resistor. This bypasses all the electronics in your photo.

You could insert the current limiting resistor in the USB cable for better heat dissipation, or use a DC-DC constant current source instead of a resistor if power consumption is a concern.
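
The current-limiting resistor suggested above can be sized with Ohm's law: the resistor drops whatever voltage the LEDs do not, at the target current. A minimal sketch, assuming a nominal 5 V on USB VBUS; the forward voltage (3.4 V) and target current (350 mA) in the example are placeholder values, not figures from this thread, and should be replaced with the numbers from the LED's datasheet.

```python
from typing import Tuple


def series_resistor(v_supply: float, v_forward: float, current_a: float) -> Tuple[float, float]:
    """Return (resistance in ohms, power dissipated in watts) for a series resistor."""
    if current_a <= 0:
        raise ValueError("current must be positive")
    if v_forward >= v_supply:
        raise ValueError("supply voltage must exceed the LED forward voltage")
    resistance = (v_supply - v_forward) / current_a  # Ohm's law: R = V / I
    power = current_a ** 2 * resistance              # heat in the resistor: P = I^2 * R
    return resistance, power


# Placeholder numbers: 5 V VBUS, 3.4 V LED forward voltage, 350 mA target current.
r, p = series_resistor(5.0, 3.4, 0.35)
print(f"{r:.2f} ohm, {p:.2f} W")  # prints 4.57 ohm, 0.56 W
```

The dissipated power is the reason heat and power consumption come up in the post: with these placeholder values, over half a watt is lost in the resistor, so it would need a suitable power rating, and a constant-current DC-DC driver avoids most of that loss.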

22 April 2023 7:45am

Further to @htarold 's excellent suggestion, you can replace that entire PCB with a simple USB breakout board (e.g. USB micro attached below) by removing the red wire and attaching it to VCC on the breakout board, and removing and attaching the black wire to GND.

Camera traps, AI, and Ecology

14 April 2023 10:08am

7 June 2023 9:42am

1 August 2023 10:46am

1 September 2023 8:06am

Scaling up insect monitoring using computer vision

6 April 2023 7:06pm

Who's going to ESA in Portland this year?

31 March 2023 9:27am

4 April 2023 9:58am

That sounds great. I think you should encourage people to bring a bit of tech with them, can be a good conversation starter/ice-breaker

4 April 2023 4:04pm

Good idea! I've got a random assortment of different acoustic recorders I can bring along.

5 April 2023 11:58pm

Indeed, I'll be there too! I like to meet new conservation friends with morning runs, so I will likely organize a couple of runs, maybe one right near the conference, and one somewhere in a nearby park where we can look for wildlife. The latter would probably be at an obscenely early hour, so we can drive somewhere, ideally see elk (there are elk within 25 minutes of Portland!), and still get back in time for the morning sessions.

1 January 2024 9:18pm

This looks amazing! I'm currently working with hastatus bats up in Bocas; it would be really interesting to utilize some of these near foraging sites. Be sure to post again when you post the final documentation on GitHub!

Also, Gamboa......dang I miss that little slice of heaven...

Super cool work Andrew!

Best,

Travis